In an earlier post, I mentioned the value of visualizations as a means of exploring topic modeling data. That particular example used a small model of 276 poems labeled “ekphrastic” out of a much larger collection. At that point, I was still struggling with how to read the data, which felt overwhelming. How could I organize the relationships between topics and documents in such a way as to see the salient connections produced by the model? The intermediate solution was to break the model down into groups of three topics and create bar graphs charting the likelihood that each document contained language from each topic. That solution worked in the short term because it helped me discover that one topic was highly likely to appear within a particular volume of ekphrastic verse: John Hollander’s The Gazer’s Spirit.

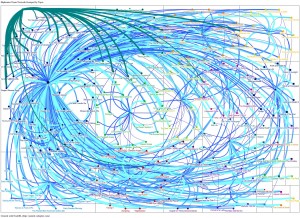

Still, what I wanted was an impressionistic overview of the documents’ association with all of the topics. The first 40 or so attempts at this process were a dismal failure. Partly because it was a learning process and partly because the results frequently resembled the much-maligned “hairball,” what I produced was completely incomprehensible. However, from August 20th to 24th, I attended the Summer Social Webshop on Technology-Mediated Social Participation, funded by the NSF, the Social Media Research Foundation, and Grand. There, I met Marc Smith, who began developing NodeXL, a social media network analysis tool built to work with Microsoft Excel, while he worked for Microsoft Research. Marc, who now leads the Social Media Research Foundation and Connected Action, generously took time to demonstrate how to import my topic modeling data into NodeXL so that I could generate graphs that are more elegant and streamlined than any I’ve been able to produce to this point. The results aren’t just beautiful: they’re useful.

So, what are those results? They include unimodal and bimodal network graphs that visualize connections between documents and other documents, between topics and other topics, and between documents and topics, all drawn from an LDA model created in MALLET. Using NodeXL’s algorithms, I am able to cluster groups with stronger ties in grid areas, assign them unique colors, and show the degree of probability the model calculates for a connection between nodes (either documents or topics, depending on the graph). The real power of NodeXL, though, is that in the future I can make my data public through the NodeXL gallery, and you can download my network graph and play with it yourself. The data isn’t quite there yet, but that’s what’s coming.
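To make the idea of a bimodal (document-to-topic) graph concrete, here is a minimal sketch of how per-document topic proportions might be turned into a weighted edge list. It is not the workflow Marc demonstrated. The function names, the `min_weight` cutoff, the zero-based topic labels, the CSV column headers, and the sample numbers are all assumptions made for illustration, and the step of parsing MALLET's own output file is left out.

```python
import csv
import io


def doc_topic_edges(doc_topics, min_weight=0.05):
    """Turn {document: [p_topic0, p_topic1, ...]} into weighted edges.

    Each edge is (document, topic_label, proportion). Edges whose
    proportion falls below min_weight (a hypothetical cutoff) are
    dropped so the graph is less likely to become a "hairball".
    """
    edges = []
    for doc, proportions in doc_topics.items():
        for topic_id, weight in enumerate(proportions):
            if weight >= min_weight:
                edges.append((doc, f"Topic {topic_id}", weight))
    return edges


def edges_to_csv(edges):
    """Serialize edges as CSV text; the header names are an assumption."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Vertex 1", "Vertex 2", "Weight"])
    for doc, topic, weight in edges:
        writer.writerow([doc, topic, f"{weight:.3f}"])
    return buffer.getvalue()


# Made-up proportions for illustration only; not values from the model.
sample = {
    "Poem A": [0.70, 0.02, 0.28],
    "Poem B": [0.01, 0.90, 0.09],
}
edges = doc_topic_edges(sample, min_weight=0.05)
csv_text = edges_to_csv(edges)
print(csv_text)
```

In a sketch like this, the weight column is what a graphing tool would map to edge width and color, so that stronger document-topic associations stand out visually.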

In the meantime, I’ll offer the following image of a network graph that I had hoped to produce with my earlier post about The Gazer’s Spirit. Though the topic label is small, Topic 3 can be seen in the top left-hand corner of the network diagram. The width and color of the edges (the lines in the diagram) are determined by the model’s estimate of how much of each topic is in each poem. A thicker, lighter line means that the model estimates that a large portion of the poem draws its language from the corresponding topic. Conversely, the thinner and darker a line is, the lower the probability that the poem includes language from the corresponding topic.

Table 1: Ekphrastic Dataset – 276 poems and 15 topics

Topic 3 (in the top left-hand corner) is primarily composed of connections to poems from The Gazer’s Spirit and is characterized by language that reflects a kind of courtship, including archaic references (thy, thee, thou) and the language of love (er, beauty, grace, eyes, heaven, divine, hand, love). This makes sense in the context of existing knowledge about Hollander’s volume. The collection reads very much like a tribute to painting and the visual arts by poetry, and the language of desire is prevalent throughout. Moreover, both W.J.T. Mitchell and James A.W. Heffernan, two prominent theorists in the ekphrastic tradition, insist that the language of love and desire is a strong, if not dominant, discourse across all of ekphrasis, based on a canon of poems mostly included in The Gazer’s Spirit. One might assume, then, that there would be strong connections between a topic composed of the language of courtship, love, and desire and most of the poems in the collection; however, only a few of the poems with a statistically significant portion of their language from Topic 3 are not also in The Gazer’s Spirit: “The Picture of Little T.C. in a Prospect of Flowers,” “The Art of Poetry [excerpt],” “Ozymandias,” “Canto I,” and “My Last Duchess.” Of those poems, none are by female poets.

| Poems with highest proportion of Topic 3 |
| --- |
| The Temeraire (Supposed to Have Been Suggested to an Englishman of the Old Order by the Flight of the Monitor and Merrimac) by Herman Melville |
| To my Worthy Friend Mr. Peter Lilly: on that Excellent Picture of His majesty, and the Duke of York, drawne by him at Hampton-Court by Sir Richard Lovelace |
| From The Testament of Beauty, Book III by Robert Bridges |
| For Spring By Sandro Botticelli (In the Academia of Florence) by Dante Gabriel Rossetti |
| To the Statue on the Capitol: Looking Eastward at Dawn by John James Piatt |
| The Poem of Jacobus Sadoletus on the Statue of Laocoon by Jacobus Sadoleto |
| To the Fragment of a Statue of Hercules, Commonly Called the Torso by Samuel Rogers |
| The Last of England by Ford Madox Brown |
| On the Group of the Three Angels Before the Tent of Abraham, by Rafaelle, in the Vatican by Washington Allston |
| Death’s Valley To accompany a picture; by request. “The Valley of the Shadow of Death,” from the painting by George Inness by Walt Whitman |
| Elegiac Stanzas Suggested by a Picture of Peele Castle, in a Storm, Painted by Sir George Beaumont by William Wordsworth |
| On the Medusa of Leonardo da Vinci in the Florentine Gallery by Percy B. Shelley |
| The Mind of the Frontispiece to a Book by Ben Jonson |
| Venus de Milo by Charles-Rene Marie Leconte de Lisle |
| The City of Dreadful Night by James Thomson |
| Sonnet by Pietro Aretino |
| For “Our Lady of the Rocks” By Leonardo da Vinci by Dante Gabriel Rossetti |
| Mona Lisa by Edith Wharton |
| Ode on a Grecian Urn by John Keats |
| The National Painting by Joseph Rodman Drake |
| The “Moses” of Michael Angelo by Robert Browning |
| Hiram Powers’ Greek Slave by Elizabeth Barrett Browning |
| From Childe Harold’s Pilgrimage, canto 4 by George Gordon Byron |
| The Picture of Little T. C. in a Prospect of Flowers by Andrew Marvell |
| Before the Mirror (Verses written under a Picture) Inscribed to J. A. Whistler by Algernon Charles Swinburne |
| For Venetian Pastoral By Giorgione (In the Louvre) by Dante Gabriel Rossetti |
| The Art of Poetry [excerpt] by Nicolas Boileau-Despreaux |
| Ozymandias by Percy B. Shelley |
| The Iliad, Book XVIII, [The Shield of Achilles] by Homer |
| Canto I by Dante Alighieri |
| The Hunters in the Snow by William Carlos Williams |
| Tiepolo’s Hound by Derek Walcott |
| St. Eustace by Derek Mahon |
| Three for the Mona Lisa by John Stone |
| My Last Duchess by Robert Browning |

Table 2: Ekphrastic Dataset 15 Topic Model, Topic 3 Highlighted

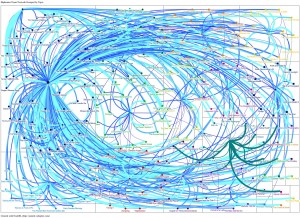

The only remaining topic that includes the word love fairly high in its keyword distribution is Topic 4, which includes the following terms: portrait, monument, foreman, felt, woman, monuments, box, press, bacall, detail, young, thick, crimson, instrument, hotel, compartment, picked, cornell, Europe, lovers. As you can see from the network diagram below, none of the poems with a high probability of containing Topic 3 are included in the Topic 4 distribution.

Table 3: Ekphrastic Dataset 15 Topic Model, Topic 4 Highlighted
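The comparison between the two topics can also be checked outside the graph. The sketch below, offered under stated assumptions, ranks documents by their proportion of a given topic and then looks for poems that appear in both lists. The function name `top_documents`, the `threshold` value, and the sample proportions are hypothetical; the cutoff the model treats as significant is not something this sketch knows.

```python
def top_documents(doc_topics, topic_id, threshold=0.3):
    """Return documents whose share of topic_id meets the threshold.

    doc_topics maps a document name to a list of topic proportions.
    Results are ordered from the highest proportion to the lowest.
    """
    hits = [
        (doc, proportions[topic_id])
        for doc, proportions in doc_topics.items()
        if proportions[topic_id] >= threshold
    ]
    hits.sort(key=lambda pair: pair[1], reverse=True)
    return [doc for doc, _ in hits]


# Made-up proportions for illustration only; not values from the model.
sample = {
    "Poem A": [0.75, 0.05, 0.20],
    "Poem B": [0.10, 0.80, 0.10],
    "Poem C": [0.40, 0.00, 0.60],
    "Poem D": [0.05, 0.35, 0.60],
}

first_topic_poems = top_documents(sample, topic_id=0, threshold=0.3)
second_topic_poems = top_documents(sample, topic_id=1, threshold=0.3)

# Poems that rank highly for both topics; an empty set means no overlap.
shared = set(first_topic_poems) & set(second_topic_poems)
print(first_topic_poems, second_topic_poems, shared)
```

An empty intersection in a check like this corresponds to what the diagram shows visually: two groups of poems that do not share a strong association with both topics.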

Equally interesting, poems with the highest proportion of Topic 4 are also authored by female poets. Certainly, more poems by men include significant proportions of Topic 4 than poems by women include significant proportions of Topic 3; however, there are striking and salient points to be made about the contrasting networks:

| Poems with highest proportion of Topic 4 |
| --- |
| “Utopia Parkway” after Joseph Cornell’s Penny Arcade Portrait of Lauren Bacall, 1945 – 46 by Lynda Hull |
| Canvas and Mirror by Evie Shockley |
| Portrait of Madame Monet on Her Deathbed by Mary Rose O’Reilley |
| Internal Monument by G. C. Waldrep |
| The Uses of Distortion by Caroline Crumpacker |
| Joseph Cornell, with Box by Michael Dumanis |
| Drawing Wildflowers by Jorie Graham |
| The Eye Like a Strange Balloon Mounts Toward Infinity by Mary Jo Bang |
| Visiting the Wise Men in Cologne by J.P. White |
| Rhyme by Robert Pinsky |
| The Street by Stephen Dobyns |
| The Portrait by Stanley Kunitz |
| “Picture of a 23-Year-Old Painted by His Friend of the Same Age, an Amateur” by C.P. Cavafy |
| Portrait in Georgia by Jean Toomer |
| For the Poem Paterson [1. Detail] by William Carlos Williams |
| The Dance by William Carlos Williams |
| Late Self-Portrait by Rembrandt by Jane Hirshfield |
| Sea Life in St. Mark’s Square by Mary O’Donnell |
| Washington’s Monument, February, 1885 by Walt Whitman |
| Still Life by Jorie Graham |
| Still Life by Tony Hoagland |
| The Family Photograph by Vona Groarke |
| The Corn Harvest by William Carlos Williams |
| Portrait of a Lady by T. S. Eliot |
| Portrait d’une Femme by Ezra Pound |

This impressionistic overview of the ekphrastic dataset, prompted by exploring a network graph of the relationships between topics and poems, is a first step. It is enough, perhaps, to formulate a new hypothesis about the difference between “love” and “lovers” in ekphrastic poetry, or to lend further support to the growing sense that there is a much broader range of kinds of attraction and kinship (a range inclusive of both competitive and kindred discourses) than previous theorizations of the genre have taken into account. The network visualization does more than suggest that there are two very different discourses regarding love and affection in ekphrastic verse; it also suggests specific poems to read closely to see what those differences might be and whether they are worth pursuing further. Through the use of networked relationships between topics and documents, we begin with lists of poems in which the discourse of affinity, affection, and desire, whether as courtship or as partnership, can be further explored through close readings.

Meeting Edward Tufte’s claim that evidence should be both beautiful and useful, the NodeXL network diagrams of LDA data are a step toward developing methods of evaluating and exploring models of figurative language, which do not necessarily fit the same criteria as models of non-figurative texts.